In this post, let’s learn about API security in the AI era. APIs now underpin almost every kind of digital interaction, so ensuring their safety has never been more important. Traditional rule-based security measures are no longer sufficient against cyber threats that keep changing and growing more intricate. Because these solutions rely on a fixed set of static rules and heuristics, they aren’t up to the task of protecting APIs against new and emerging risks.

Attackers are also getting better at using automation, including large language models (LLMs). We therefore need smarter, more adaptable security solutions, such as those built on artificial intelligence (AI) and machine learning (ML).

The current challenges in API security

- Advanced attacks

Cybercriminals are becoming craftier, and they often use stealth tactics to evade traditional security measures. Some attacks exploit APIs gradually and subtly, staying below the limits that rule-based systems check for. AI can provide stronger protection against these sophisticated attacks because it can detect even small deviations from normal behavior.
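One classic, non-ML way to catch the kind of small but persistent shift described above is a one-sided CUSUM (cumulative sum) control chart. It accumulates small deviations that would each stay below a fixed per-request threshold. The sketch below is a minimal illustration under assumptions: the monitored metric (here, a client’s fraction of failed authorization checks per minute), its baseline mean, and the `slack` and `threshold` values are all hypothetical.

```python
from typing import Iterable, List


def cusum_alerts(values: Iterable[float], baseline: float,
                 slack: float, threshold: float) -> List[int]:
    """One-sided (upper) CUSUM: return the indices at which a sustained upward drift is flagged.

    baseline  -- expected mean of the metric under normal behavior (assumed known)
    slack     -- allowance k; per-step deviations smaller than this are ignored
    threshold -- decision limit h; an alert fires when the cumulative sum exceeds it
    """
    alerts: List[int] = []
    s = 0.0
    for i, x in enumerate(values):
        # Accumulate only the part of each deviation above the slack; never drop below zero.
        s = max(0.0, s + (x - baseline - slack))
        if s > threshold:
            alerts.append(i)
            s = 0.0  # restart accumulation after raising an alert
    return alerts


# Hypothetical per-minute failure rates: normal, then a subtle sustained rise to 6%.
rates = [0.02, 0.01, 0.03, 0.02] + [0.06] * 6
print(cusum_alerts(rates, baseline=0.02, slack=0.01, threshold=0.1))  # [7]
```

No single 6% reading would trip a hypothetical static 10% limit, yet the accumulated drift is flagged in the fourth minute of the rise.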

- Preventive security

Traditional security methods tend to react to threats only after they have happened. AI and ML, on the other hand, make proactive measures possible: by analyzing both historical and current data, these technologies can anticipate likely attacks so that defenses are in place beforehand.
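Real predictive models vary widely. As a much simpler stand-in, Holt’s linear exponential smoothing projects a metric forward from its past and present values. That is enough to show the idea of acting before a limit is reached. The metric, the smoothing factors `alpha` and `beta`, the forecast horizon, and the alert limit are all illustrative assumptions.

```python
from typing import List, Sequence


def holt_forecast(series: Sequence[float], alpha: float, beta: float,
                  horizon: int) -> List[float]:
    """Holt's linear (double) exponential smoothing.

    alpha -- smoothing factor for the level, 0 < alpha <= 1
    beta  -- smoothing factor for the trend, 0 < beta <= 1
    Returns point forecasts for the next `horizon` steps.
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for x in series[1:]:
        prev_level = level
        level = alpha * x + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]


def predicts_breach(series: Sequence[float], limit: float, alpha: float = 0.5,
                    beta: float = 0.3, horizon: int = 3) -> bool:
    """Illustrative rule: warn if any forecast within the horizon exceeds `limit`."""
    return any(f > limit for f in holt_forecast(series, alpha, beta, horizon))


# Hypothetical hourly counts of failed logins against one API.
failed_logins = [10, 20, 30, 40]
print(holt_forecast(failed_logins, 0.5, 0.3, 3))
print(predicts_breach(failed_logins, limit=65))  # True
```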

- Scale and complexity

Modern businesses may run hundreds, or even thousands, of APIs. The sheer number of APIs and the relationships between them make it harder both to keep an accurate inventory by hand and to detect suspicious activity or security breaches. This massive undertaking is a good fit for the computational power of AI and ML.
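Keeping an accurate inventory is usually the first step toward monitoring APIs at this scale. The sketch below shows a minimal, rule-based form of automated API discovery. It groups raw request paths from access logs into endpoint templates and counts traffic per endpoint. The `(method, path)` log format and the rule that numeric or UUID-like path segments are parameters are assumptions made for illustration.

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Assumption: segments that look like numeric IDs or UUIDs are path parameters.
_NUMERIC = re.compile(r"^\d+$")
_UUID = re.compile(
    r"^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$"
)


def to_template(path: str) -> str:
    """Collapse ID-like path segments into a placeholder, e.g. /users/42 -> /users/{id}."""
    path = path.split("?", 1)[0]  # drop the query string
    parts = []
    for seg in path.strip("/").split("/"):
        parts.append("{id}" if _NUMERIC.match(seg) or _UUID.match(seg) else seg)
    return "/" + "/".join(parts)


def inventory(requests: Iterable[Tuple[str, str]]) -> Counter:
    """Count requests per (HTTP method, endpoint template) pair."""
    return Counter((method.upper(), to_template(path)) for method, path in requests)


# Hypothetical access-log entries.
log = [
    ("GET", "/users/42"),
    ("get", "/users/7?expand=1"),
    ("DELETE", "/users/7"),
    ("GET", "/orders/123e4567-e89b-12d3-a456-426614174000/items"),
]
print(inventory(log).most_common())
```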

- Emerging risks

The nature of cyber threats keeps evolving. Attackers constantly come up with new tactics, adapt to security measures, and find holes that previously went unnoticed. Traditional security solutions often fall behind such rapidly changing strategies. ML models cope with this change well because they can be updated with new data and learn from past mistakes.
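Retraining a full model is beyond a short example. The core idea of a detector that keeps learning from new data can still be sketched with an exponentially weighted mean and variance that update after every observation, so older behavior gradually fades from the baseline. The decay factor `alpha`, the warm-up length, and the 3-standard-deviation rule are illustrative assumptions.

```python
import math


class AdaptiveBaseline:
    """Exponentially weighted mean/variance baseline that adapts as traffic changes."""

    def __init__(self, alpha: float = 0.1, z_limit: float = 3.0, warmup: int = 5) -> None:
        self.alpha = alpha      # weight of each new observation (assumed value)
        self.z_limit = z_limit  # standard deviations that count as anomalous (assumed)
        self.warmup = warmup    # observations absorbed before anything is flagged
        self.n = 0
        self.mean = 0.0
        self.var = 0.0

    def update(self, x: float) -> bool:
        """Score x against the current baseline, then learn from it. True means anomalous."""
        self.n += 1
        if self.n == 1:
            self.mean = x
            return False
        std = math.sqrt(self.var)
        anomalous = (self.n > self.warmup and std > 0
                     and abs(x - self.mean) / std > self.z_limit)
        # Incremental exponentially weighted update of mean and variance.
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return anomalous


# Hypothetical requests per minute: normal fluctuation, then a spike.
baseline = AdaptiveBaseline()
print([baseline.update(x) for x in [100, 110] * 4 + [500]])  # only the final spike is flagged
```

If the new level persists (for example after a legitimate launch), the baseline shifts toward it and later readings at that level stop being flagged.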

- Optimizing resources

Artificial intelligence and machine learning have the potential to automate several facets of API security, including the identification and mitigation of threats. By taking over routine tasks, they free security teams to concentrate on high-level projects and on risks that require human knowledge and experience.


Applications of AI in API security

Incorporating AI into API development and security opens the door to stronger defenses and better performance. Some of its most important uses are as follows:

- Examining behavior

To identify bad actors or compromised accounts, AI builds a profile of each user’s normal behavior and then flags deviations from it. This is especially useful for fraud prevention and threat detection.
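A toy version of behavioral profiling records which endpoints each user normally calls and at which hours. It then reports the ways a new request falls outside that profile. The profile features, the `min_history` rule, and the user and endpoint names are hypothetical. Real systems learn far richer statistical models, and this sketch naively learns from every request, including flagged ones.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class UserProfile:
    endpoints: Set[str] = field(default_factory=set)
    hours: Set[int] = field(default_factory=set)
    seen: int = 0


class BehaviorProfiler:
    """Learns each user's usual endpoints and active hours; reports deviations."""

    def __init__(self, min_history: int = 20) -> None:
        self.min_history = min_history  # requests needed before a profile is trusted
        self.profiles: Dict[str, UserProfile] = defaultdict(UserProfile)

    def observe(self, user: str, endpoint: str, hour: int) -> List[str]:
        """Return reasons this request looks unusual for the user, then learn from it."""
        p = self.profiles[user]
        reasons: List[str] = []
        if p.seen >= self.min_history:
            if endpoint not in p.endpoints:
                reasons.append(f"new endpoint {endpoint}")
            if hour not in p.hours:
                reasons.append(f"unusual hour {hour}")
        # Toy behavior: every request, even a flagged one, is added to the profile.
        p.endpoints.add(endpoint)
        p.hours.add(hour)
        p.seen += 1
        return reasons


profiler = BehaviorProfiler(min_history=3)
for hour in (9, 10, 11):
    profiler.observe("alice", "/orders", hour)
print(profiler.observe("alice", "/admin/export", 3))
# ['new endpoint /admin/export', 'unusual hour 3']
```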

- Natural language processing (NLP) for query analysis

For APIs that accept input from users, natural language processing (NLP) can analyze incoming requests to spot malicious or malformed content before it reaches the backend. Because APIs carry many different kinds of data, NLP can support several security processes: preventing breaches, detecting them, and assessing their size and scope.
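As a deliberately tiny illustration of text classification applied to API input, the sketch below trains a character-trigram naive Bayes classifier to separate ordinary search queries from injection-style payloads. This classic technique is far simpler than modern NLP models. The training examples are made up and far too few for real use, and the labels `benign` and `malicious` are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def char_ngrams(text: str, n: int = 3) -> List[str]:
    """Lower-cased character n-grams, padded with spaces so word edges count."""
    padded = f" {text.lower()} "
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


class NgramNaiveBayes:
    """Multinomial naive Bayes over character trigrams with add-one smoothing."""

    def __init__(self) -> None:
        self.gram_counts: Dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()
        self.vocab: set = set()

    def fit(self, samples: Iterable[Tuple[str, str]]) -> "NgramNaiveBayes":
        for text, label in samples:
            grams = char_ngrams(text)
            self.gram_counts.setdefault(label, Counter()).update(grams)
            self.doc_counts[label] += 1
            self.vocab.update(grams)
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = "", -math.inf
        for label, counts in self.gram_counts.items():
            total = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # class prior
            for gram in char_ngrams(text):
                # Laplace-smoothed likelihood of each trigram under this class.
                score += math.log((counts[gram] + 1) / (total + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Made-up training data; far too small for real use.
TRAINING = [
    ("blue running shoes", "benign"),
    ("laptop bag", "benign"),
    ("john smith", "benign"),
    ("order status", "benign"),
    ("wireless headphones", "benign"),
    ("' or 1=1 --", "malicious"),
    ("<script>alert(1)</script>", "malicious"),
    ("1; drop table users --", "malicious"),
    ("admin' --", "malicious"),
    ("' union select password from users --", "malicious"),
]

model = NgramNaiveBayes().fit(TRAINING)
print(model.predict("red running shoes"))  # benign
print(model.predict("' or 'a'='a' --"))    # malicious
```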

- Access control in real-time

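AI can adjust access rights on APIs in real time by combining signals to assess each user’s behavior and risk. These signals include identity and access management (IAM) data, authorization context, context from tokens, user agents, and the autonomous system number (ASN) behind the client’s IP address. This helps contain potential risks promptly.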
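The decision logic can be sketched as a weighted risk score over contextual signals that maps to allow, step-up authentication, or deny. In a real deployment the weights would be learned from data. Here the signal names, weights, and cut-offs are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class RequestContext:
    # Hypothetical signals extracted from IAM data, the token, and the connection.
    token_age_minutes: float
    scope_matches_endpoint: bool
    user_agent_seen_before: bool
    asn_seen_before: bool
    asn_is_hosting_provider: bool


# Hypothetical weights; a trained model would learn these from labeled traffic.
WEIGHTS = {
    "stale_token": 0.2,
    "scope_mismatch": 0.5,
    "new_user_agent": 0.15,
    "new_asn": 0.2,
    "hosting_asn": 0.25,
}


def risk_score(ctx: RequestContext) -> float:
    score = 0.0
    if ctx.token_age_minutes > 60:
        score += WEIGHTS["stale_token"]
    if not ctx.scope_matches_endpoint:
        score += WEIGHTS["scope_mismatch"]
    if not ctx.user_agent_seen_before:
        score += WEIGHTS["new_user_agent"]
    if not ctx.asn_seen_before:
        score += WEIGHTS["new_asn"]
    if ctx.asn_is_hosting_provider:
        score += WEIGHTS["hosting_asn"]
    return score


def access_decision(ctx: RequestContext) -> str:
    """Map the risk score to an action; the cut-offs are illustrative assumptions."""
    score = risk_score(ctx)
    if score >= 0.6:
        return "deny"
    if score >= 0.3:
        return "step_up"  # e.g., require re-authentication or MFA
    return "allow"


trusted = RequestContext(10, True, True, True, False)
print(access_decision(trusted))  # allow
```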

- Anomaly detection

AI models sift through massive amounts of API traffic data to find unusual patterns that may indicate a security breach.
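As one simple, non-ML baseline for this kind of detection, the sketch below flags time windows whose request count lies far from the median. It measures spread with the median absolute deviation (MAD), so the outliers themselves do not distort the baseline. The 3.5 cut-off on the modified z-score is a commonly cited rule of thumb, treated here as an adjustable assumption. The traffic numbers are invented.

```python
import statistics
from typing import List, Sequence


def robust_outliers(counts: Sequence[float], cutoff: float = 3.5) -> List[int]:
    """Return indices of windows whose modified z-score exceeds `cutoff`.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation from the median.
    """
    med = statistics.median(counts)
    mad = statistics.median(abs(x - med) for x in counts)
    if mad == 0:
        # Degenerate case: most windows are identical, so flag anything that differs.
        return [i for i, x in enumerate(counts) if x != med]
    return [i for i, x in enumerate(counts) if abs(0.6745 * (x - med) / mad) > cutoff]


# Hypothetical requests per minute for one endpoint.
print(robust_outliers([120, 118, 125, 130, 122, 119, 900, 121]))  # [6]
```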

- Rate-limiting and throttling

AI makes it possible to adjust rate limits dynamically, for example by tightening them for clients that look risky. This keeps performance high for legitimate users while discouraging malicious actors.
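A token bucket is a standard rate-limiting algorithm. One way to make it dynamic is to scale its refill rate by a per-client risk score, such as one produced by the kinds of models described above. The scaling rule (up to ten times slower refill at maximum risk) and the parameters are assumptions for illustration. Time is passed in explicitly so the behavior is deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class AdaptiveTokenBucket:
    """Token bucket whose refill rate shrinks as a client's risk score grows."""

    capacity: float                                  # maximum burst size
    base_rate: float                                 # tokens per second for a zero-risk client
    tokens: float = field(init=False, default=0.0)
    last: float = 0.0                                # time of the previous call, in seconds

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start with a full bucket

    def allow(self, now: float, risk: float) -> bool:
        """Consume one token if available; `risk` in [0, 1] is assumed to come from a model."""
        risk = min(max(risk, 0.0), 1.0)
        rate = self.base_rate * (1.0 - 0.9 * risk)  # hypothetical rule: up to 10x slower refill
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = AdaptiveTokenBucket(capacity=2, base_rate=1.0)
print([bucket.allow(0.0, risk=0.0) for _ in range(3)])  # [True, True, False]
```

After one second, a zero-risk client has earned a new token, while a client scored at maximum risk has earned only a tenth of one.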

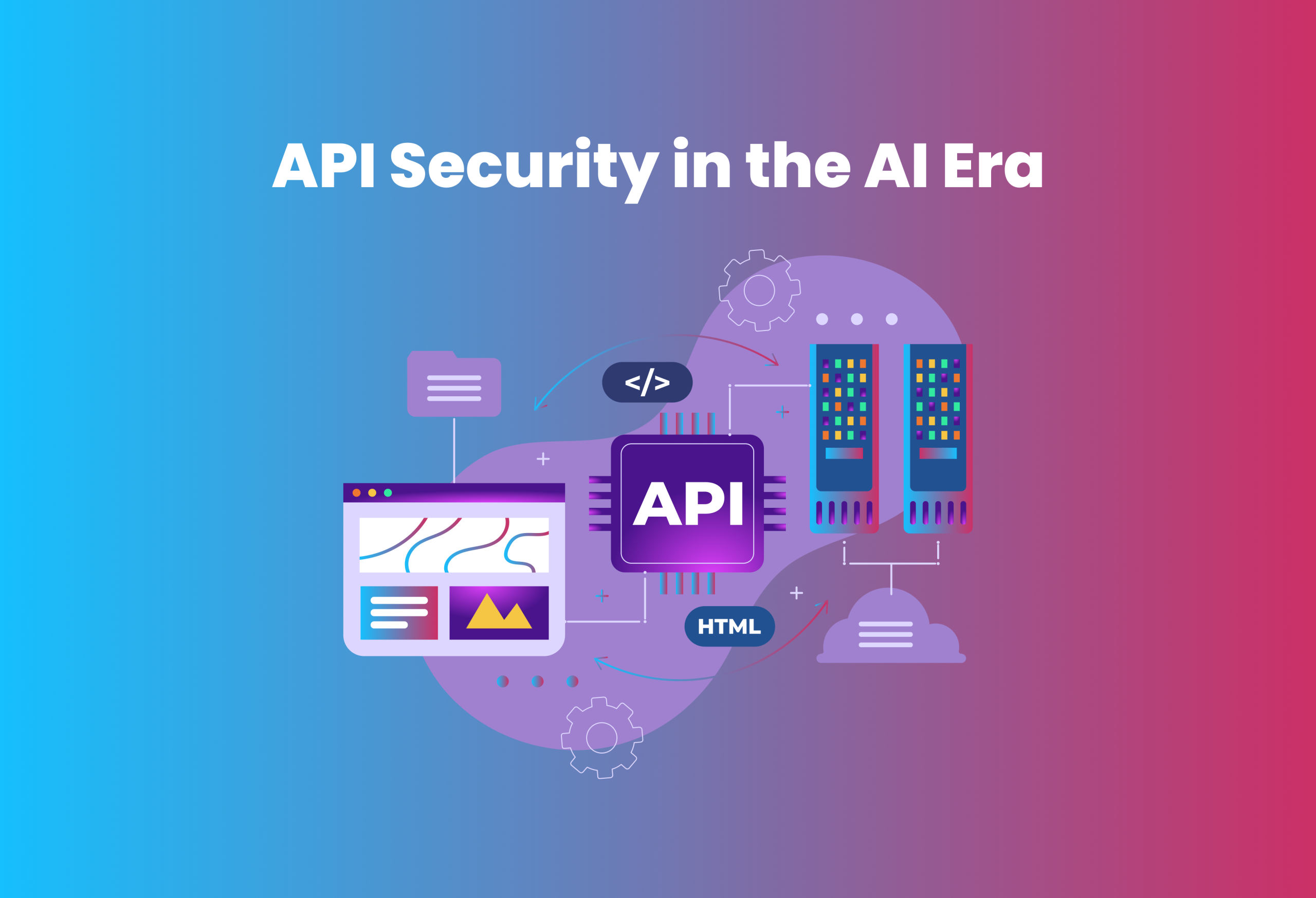

- Automated penetration testing

By simulating cyberattacks on APIs, AI-driven tools can uncover weaknesses so that they can be fixed before attackers exploit them. In the never-ending fight against API misuse, this proactive testing can help organizations stay ahead of the curve.
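Automated testing of this kind often builds on fuzzing: feeding many generated or mutated inputs to an endpoint and recording which ones cause failures. The sketch below is a plain random mutation fuzzer, not an AI system. `parse_quantity` is a hypothetical, deliberately buggy handler standing in for real endpoint logic, and the seed inputs and special values are illustrative.

```python
import random
from typing import Callable, Dict

SEEDS = ["1", "5", "42"]                                    # valid example inputs
SPECIAL = ["0", "-1", "", "9" * 30, "' OR 1=1 --", "\x00"]  # known-tricky values


def parse_quantity(payload: str) -> int:
    """Hypothetical endpoint logic with bugs: it trusts the client-supplied value."""
    qty = int(payload)  # raises ValueError on non-numeric input
    return 100 // qty   # raises ZeroDivisionError when qty == 0


def mutate(seed: str, rng: random.Random) -> str:
    choice = rng.randrange(3)
    if choice == 0:
        return rng.choice(SPECIAL)         # swap in a tricky value
    if choice == 1:
        return seed + rng.choice(SPECIAL)  # append junk
    pos = rng.randrange(len(seed) + 1)
    return seed[:pos] + chr(rng.randrange(32, 127)) + seed[pos:]  # insert a printable char


def fuzz(handler: Callable[[str], object], iterations: int = 500,
         rng_seed: int = 0) -> Dict[str, str]:
    """Return {exception type name: first input that triggered it}."""
    rng = random.Random(rng_seed)
    crashes: Dict[str, str] = {}
    for _ in range(iterations):
        case = mutate(rng.choice(SEEDS), rng)
        try:
            handler(case)
        except Exception as exc:  # any uncaught exception counts as a finding
            crashes.setdefault(type(exc).__name__, case)
    return crashes


print(fuzz(parse_quantity))
```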

- Predictive analysis

Because AI can sift through vast amounts of data, it can help anticipate possible security risks and mitigate them before they materialize.

- API design assistance

Developers can benefit from AI-powered tools that recommend how to structure APIs, how to handle data, and which security measures to apply throughout the API lifecycle.
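The kind of finding such assistants surface can be illustrated without any AI at all. The sketch below is a plain rule-based review of an OpenAPI 3 document, represented as a Python dict. It flags servers that do not use HTTPS and operations with no effective `security` requirement. In OpenAPI, an operation-level `security` list overrides the top-level one, and an empty list disables it. The example spec is invented.

```python
from typing import Any, Dict, List

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def review_spec(spec: Dict[str, Any]) -> List[str]:
    """Return human-readable findings for a (hypothetical) OpenAPI 3 document."""
    findings: List[str] = []
    global_security = spec.get("security")
    for server in spec.get("servers", []):
        url = server.get("url", "")
        if url.startswith("http://"):
            findings.append(f"server {url} does not use HTTPS")
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as 'parameters' or 'summary'
            security = op.get("security", global_security)
            if not security:  # None (never declared) or [] (explicitly disabled)
                findings.append(f"{method.upper()} {path} has no security requirement")
    return findings


spec = {
    "openapi": "3.0.3",
    "servers": [{"url": "http://api.example.com"}],
    "paths": {
        "/users": {"get": {"security": [{"bearerAuth": []}]}, "post": {}},
        "/health": {"get": {"security": []}},
    },
}
for finding in review_spec(spec):
    print(finding)
```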

- Automated response systems

Once a threat is discovered, AI can automate responses such as requiring users to re-authenticate, blocking users, and notifying security staff.
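An automated response layer is often organized as a playbook that maps a detected threat type and severity to a list of actions. The threat names, severity scale, and action functions below are hypothetical placeholders. Real actions would call an identity provider, an API gateway, and a paging system instead of writing to a log.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Incident:
    kind: str       # hypothetical threat type produced by a detector
    severity: int   # 1 (low) to 3 (high)
    user: str
    log: List[str] = field(default_factory=list)


# Placeholder actions; each only records what a real integration would do.
def require_reauth(i: Incident) -> None:
    i.log.append(f"revoke sessions and require re-authentication for {i.user}")


def block_user(i: Incident) -> None:
    i.log.append(f"block {i.user} at the API gateway")


def notify_security(i: Incident) -> None:
    i.log.append(f"page security team about {i.kind} (severity {i.severity})")


PLAYBOOK: Dict[str, List[Callable[[Incident], None]]] = {
    "credential_stuffing": [require_reauth, notify_security],
    "data_exfiltration": [block_user, notify_security],
}


def respond(incident: Incident) -> List[str]:
    actions = PLAYBOOK.get(incident.kind, [notify_security])  # unknown threats go to humans
    if incident.severity < 2:
        # Low severity: skip disruptive actions and only notify people.
        actions = [a for a in actions if a is notify_security]
    for action in actions:
        action(incident)
    return incident.log


print(respond(Incident("data_exfiltration", 3, "client-42")))
```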

The future of AI and ML in API security

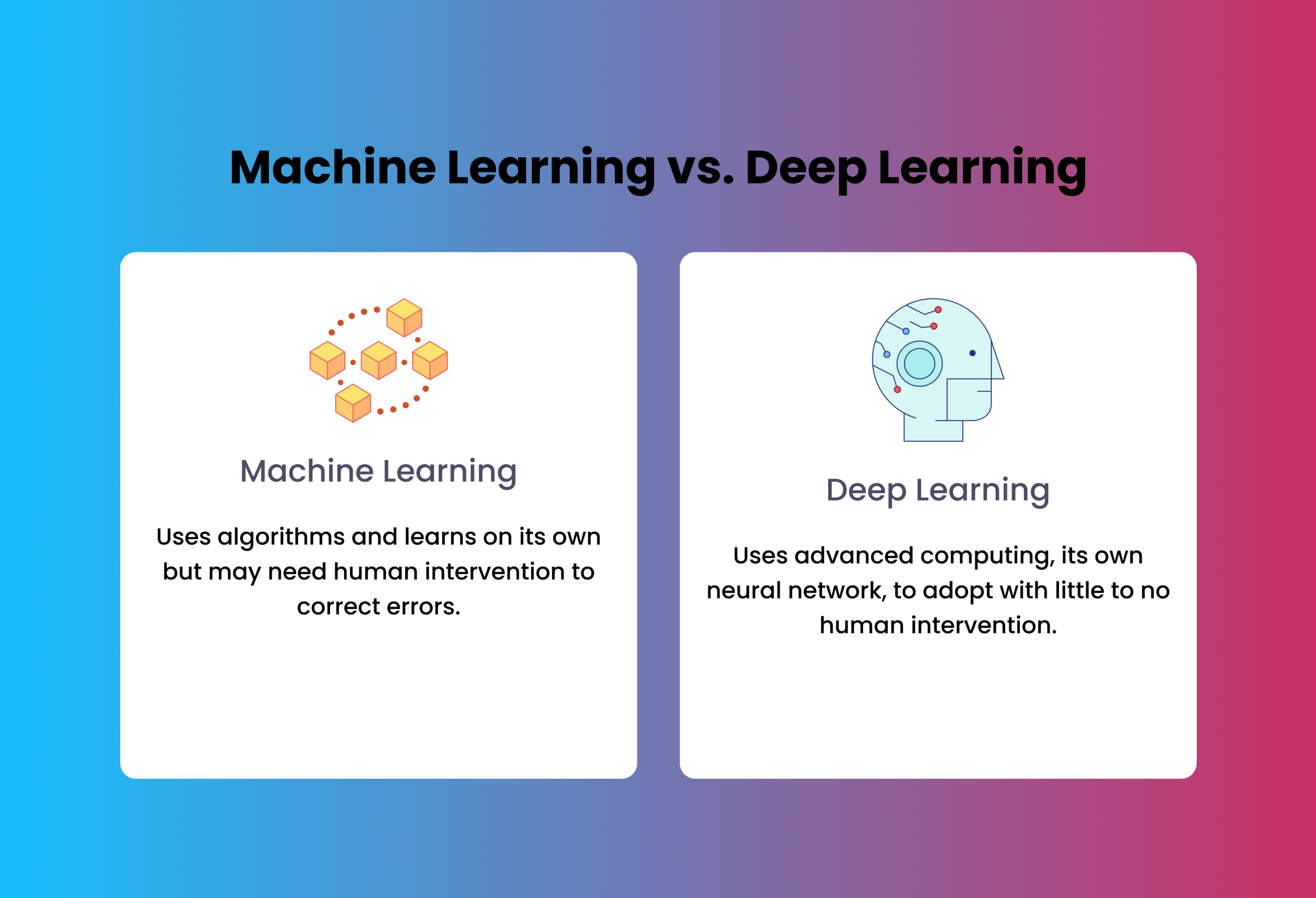

From what we can see, incorporating AI and ML into API security isn’t a fad; it’s the way forward. We should expect to rely on these technologies even more in the future, especially as new methods emerge that make them more effective. Deep learning is one of these methods. It’s a subfield of machine learning built on artificial neural networks (ANNs) with many layers. Loosely inspired by the way the human brain processes information, deep learning models learn increasingly abstract representations of their input. This lets them find patterns and connections even in unstructured data such as text, images, and audio.

Deep learning could greatly enhance API security by studying API traffic patterns and spotting tiny irregularities that might otherwise go unnoticed. Large language models (LLMs), which are themselves deep learning models, may improve security results further. LLMs are neural networks with billions of parameters, trained with self-supervised learning on very large volumes of unlabeled data. They can also help generate queries and rules for malware research and detection tools such as YARA, so that possible risks are identified and mitigated more quickly.

Federated learning

Federated learning is another fascinating field. It’s a distributed machine learning strategy that trains models across several decentralized devices or servers, each holding its own local data samples. This approach has two fundamental advantages. First, it allows learning from multiple data sources, which can lead to more accurate and resilient models. Second, it removes the need to share raw data, which helps preserve data privacy.

With federated learning, decentralized threat intelligence could become possible for API security. In this scenario, each API node would learn from its own traffic and then share what it had learned (model updates rather than raw data) with the rest of the network, making the protection system as a whole more intelligent and adaptable.
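The aggregation step most commonly associated with federated learning is federated averaging (FedAvg). Each node trains locally and sends only its model parameters, and the coordinator averages them weighted by how many samples each node saw. The sketch below shows just that aggregation step, with plain lists standing in for parameter vectors. The gateway weights and sample counts are invented.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[Sequence[float], int]]) -> List[float]:
    """Sample-weighted average of model parameters (the FedAvg aggregation step).

    updates -- one (parameters, num_local_samples) pair per node.
    Only parameters and sample counts are shared; raw API logs stay on each node.
    """
    if not updates:
        raise ValueError("no updates to aggregate")
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    if any(len(p) != dim for p, _ in updates):
        raise ValueError("all nodes must share the same model shape")
    return [sum(p[i] * n for p, n in updates) / total for i in range(dim)]


# Three hypothetical API gateways report locally trained weights and sample counts.
node_updates = [([0.2, -1.0], 1000), ([0.4, -0.5], 3000), ([0.3, -0.8], 1000)]
print(federated_average(node_updates))
```

In a full training loop, the averaged parameters would be sent back to every node as the starting point for its next round of local training.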

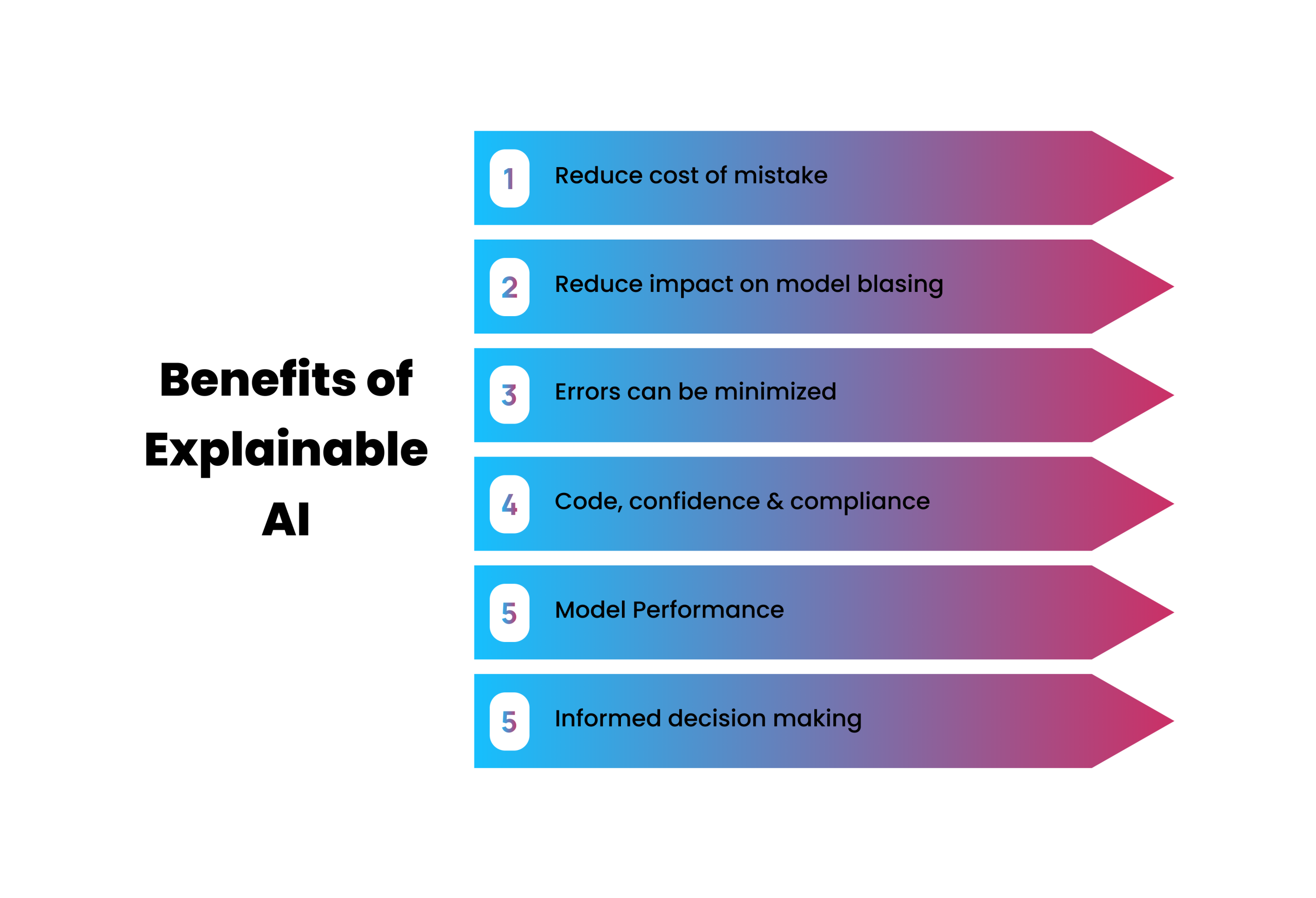

The emergence of Explainable AI (XAI)

Explainable AI (XAI) is another development that could significantly change the picture. The goal of XAI is to remove the ‘black box’ aspect of AI systems by making their judgments more transparent and comprehensible to humans. In API security, understanding why an AI system labels a certain action as suspicious helps teams reduce false positives, gain a better understanding of the threat landscape, and fine-tune security measures.
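For a linear scoring model, one simple form of explanation is to report how much each feature contributed to a flagged request’s score: the weight times the feature’s deviation from its normal value. Model-agnostic methods such as SHAP extend this idea to non-linear models. The feature names, weights, and baseline values below are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical linear model: score = sum(weight * (value - baseline)).
FEATURE_WEIGHTS = {"requests_per_min": 0.02, "distinct_endpoints": 0.3, "error_rate": 4.0}
FEATURE_BASELINE = {"requests_per_min": 30.0, "distinct_endpoints": 4.0, "error_rate": 0.02}


def explain(features: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the anomaly score and each feature's contribution, largest first."""
    contributions = {
        name: FEATURE_WEIGHTS[name] * (features[name] - FEATURE_BASELINE[name])
        for name in FEATURE_WEIGHTS
    }
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


score, reasons = explain({"requests_per_min": 40, "distinct_endpoints": 25, "error_rate": 0.05})
print(round(score, 2))
for name, contribution in reasons:
    print(f"{name}: {contribution:+.2f}")  # distinct_endpoints dominates the score
```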

Even with these promising advancements, we will still need well-designed APIs, frequent security assessments, and well-informed human decision-making. What these technologies offer are powerful tools that supplement and strengthen those essential safeguards, helping to secure our digital future.