How Is Generative AI Shaping Software Development

AI is changing the world, and it’s changing the world of developers too. So how is generative AI shaping software development? How will AI affect developers, and is it going to be the end of developers’ work? These speculations intensified in November 2022 with the release of ChatGPT, which quickly became the talk of the town and raised questions about the impact of similar large language models.

With the rise of generative AI, organizations have had to reconsider how they function and what could and should be changed. They are particularly concerned about the influence of generative AI on software development. The promise of generative AI in software development is intriguing, but does that mean it’s risk-free?

Members of VMware’s Tanzu Vanguard group, who are professional practitioners in a variety of sectors, explain how technologies like generative AI are influencing software development and technology decisions. Use their findings to help evaluate your organization’s AI investments.

How is generative AI shaping software development?

Could AI replace developers? As revolutionary as generative AI is, it will not replace developers. Rather, AI contributes a level of software development speed that didn’t exist before. It boosts productivity and efficiency by allowing developers to generate code more quickly. Chatbots such as ChatGPT, as well as tools such as GitHub Copilot, can help developers focus on providing value rather than writing boilerplate code. By acting as a multiplier of developer productivity, AI expands what developers can achieve with the time they save. Despite its capabilities and its benefits for pipeline automation, the technology is still a long way from fully replacing human developers.

Generative AI should not be viewed as capable of working autonomously. It still requires supervision, both to ensure the code is accurate and to keep it secure. According to Thomas Rudrof, DevOps Engineer at DATEV eG, developers must still be able to grasp the context and meaning of AI’s replies because they are not always right. Rudrof feels that AI is better suited to helping with simple, repetitive activities and to acting as a supplement to, rather than a replacement for, the developer role.

Are there risks associated with AI in software development?

Despite its capacity to make developers more productive, generative AI is not error-free. Developers must still carefully check any AI-generated code, and finding and fixing errors in code they did not write themselves may be more challenging. Lukasz Piotrowski, a developer at Atos Global Services, points out that there is also more risk involved because the generated code follows the dataset available to the model and logic set forth by someone else. As a result, the technology will only be as good as the data it is given.

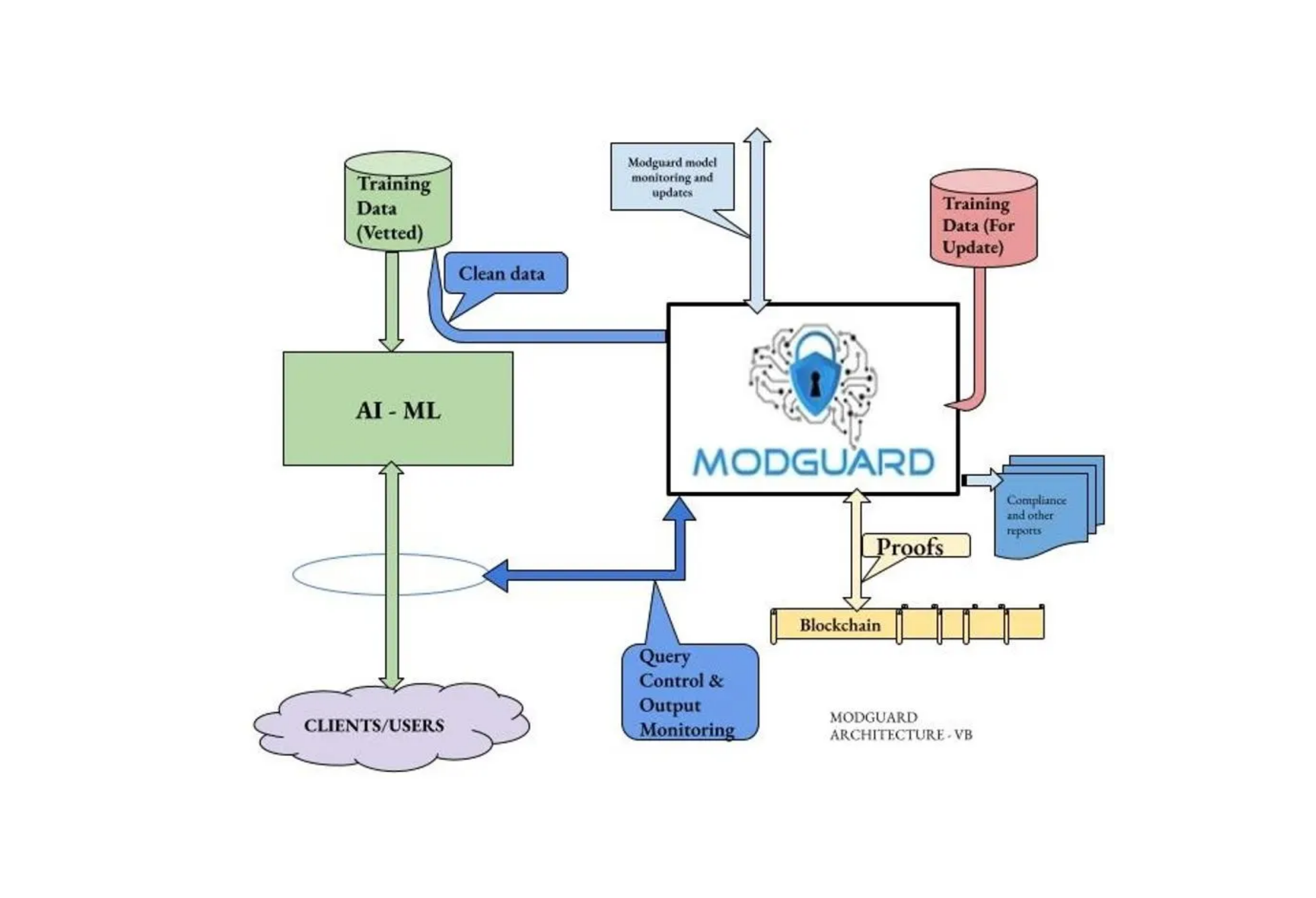

Separately, AI raises security concerns because attackers will attempt to exploit the capabilities of AI tools, while security experts will use the same technology to guard against such attacks. Developers must be careful to adhere to standard practices and avoid embedding credentials and tokens directly in their code. Nothing sensitive, including an IP address that might be exposed to other users, should be submitted to an AI tool. Even with precautions in place, AI has the potential to compromise security. According to Jim Kohl, DevOps Consultant at GAIG, if care is not exercised in the intake phase, there can be serious hazards if a security scheme or other sensitive information is unintentionally passed to generative AI.
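The two precautions above can be sketched in a few lines of Python. This is a minimal illustration, not a complete secret scanner: the function names `load_token` and `redact`, the environment variable name `SERVICE_API_TOKEN`, and the two regular expressions are all assumptions made for this example, and real tooling would need to cover many more secret formats.

```python
import os
import re

# Hypothetical patterns for illustration only; they catch IPv4-looking
# strings and simple "name = value" secret assignments, nothing more.
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
SECRET_ASSIGNMENT = re.compile(
    r"\b(password|passwd|secret|token|api_key)\b(\s*[:=]\s*)(['\"]?)[^\s'\"]+\3",
    re.IGNORECASE,
)


def load_token(name: str = "SERVICE_API_TOKEN") -> str:
    """Read a credential from the environment instead of hardcoding it."""
    token = os.environ.get(name)
    if not token:
        raise RuntimeError(f"environment variable {name} is not set")
    return token


def redact(text: str) -> str:
    """Mask obvious secrets and IPv4 addresses before text leaves your machine."""
    # Keep the key name, separator, and quotes; replace only the value.
    text = SECRET_ASSIGNMENT.sub(r"\1\2\3<REDACTED>\3", text)
    return IPV4.sub("<IP>", text)
```

For example, `redact('token = "abc123" host 10.0.0.5')` returns `token = "<REDACTED>" host <IP>`. A filter like this is a last line of defense; it may also mask harmless strings that merely look like IP addresses, and it does not replace reviewing what gets pasted into a prompt.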

What are the best practices for using AI in software development?

There are currently no established best practices for using AI in software development. Many businesses are still only experimenting with AI-generated code because of concerns about its influence on security, copyright, data privacy, and other issues.

However, businesses that are already using AI must exercise caution and should not blindly trust the technology. According to Juergen Sussner, Lead Cloud Platform Engineer at DATEV eG, firms should start with small use cases and evaluate them thoroughly; if they work, scale them; if not, consider another use case. Small-scale tests like these let organizations assess the dangers and limitations of the technology.

Guardrails are required when using AI and help people use the technology securely. If your business ignores how AI is being adopted, it risks security, ethical, and legal problems. In fact, some organizations have already faced harsh consequences over AI tools used for research and coding, so responding promptly should be a priority. For example, corporations have been sued for training AI algorithms on data lakes containing thousands of works used without authorization.

So what’s the main issue that businesses face when employing AI in software development? According to Scot Kreienkamp, Senior Systems Engineer at La-Z-Boy, the issue is getting an AI to grasp context. This is why engineers must understand how to frame AI prompts. If you are serious about AI technology, invest in educational programs and training courses so that your staff become better at prompt engineering.

Wrapping up

Right now, organizations are grappling with the implications of generative AI while a major change in software development is taking place. Developers have begun to use the technology and, at the very least, have become more efficient at coding and at establishing software platform foundations. As established earlier, AI is not going to replace developers. Rather, AI requires the supervision of a human operator, because it is not reliable or trustworthy on its own. As VMware’s Vanguards state, it’s important to integrate AI carefully and maintain guardrails to reduce risk in software development.